Saliency-Based Feature Selection Strategy in Stereoscopic Panoramic Video Generation

Authors: Wang, H., Sandin, D. J., Schonfeld, D.

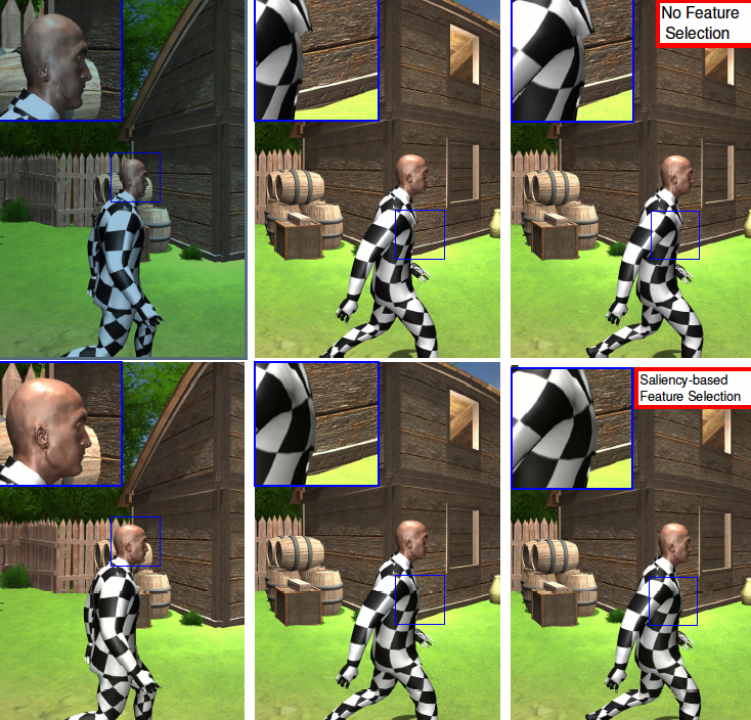

Publication: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, Canada

URL: http://dx.doi.org/10.1109/ICASSP.2018.8461649

In this paper, we present a saliency-based feature selection and tracking strategy for feature-based stereoscopic panoramic video generation systems. Many existing stereoscopic video composition approaches aim to produce high-quality panoramas from multiple input cameras; however, most of them perform image alignment directly on the originally detected features without any refinement or optimization. A standard global feature extraction threshold cannot guarantee correct stitching in all regions of interest to human viewers. Thus, starting from the original commonly identified feature set, we incorporate the saliency map into the distribution of control points to remove redundancy in texture-rich regions and to ensure that enough features are selected in visually sensitive regions. Guided by saliency changes in the video sequence, a grid-based feature updating strategy is applied between consecutive frames instead of the standard global feature update. Experiments show that our method improves the stitching quality of visually important regions without impairing the regions of less interest to viewers in the generated stereoscopic panoramic video.

Index Terms: Stereoscopic Panoramic Video, Commonly-identified Feature, Saliency Map, Visual Sensitive

Date: April 15, 2018 - April 20, 2018
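
The abstract describes two ideas: distributing control points according to a saliency map, and updating features per grid cell when saliency changes between frames. The following is a minimal illustrative sketch of those two ideas, not the authors' implementation. All function names, the grid size, the feature budget, the proportional quota rule, and the change tolerance are assumptions introduced here for illustration; the paper may define them differently.

```python
import numpy as np

def cell_means(saliency, grid):
    """Mean saliency of each grid cell, flattened row by row."""
    h, w = saliency.shape
    rows, cols = grid
    means = np.zeros(rows * cols)
    for r in range(rows):
        for c in range(cols):
            block = saliency[r * h // rows:(r + 1) * h // rows,
                             c * w // cols:(c + 1) * w // cols]
            means[r * cols + c] = block.mean()
    return means

def select_features_by_saliency(points, scores, saliency,
                                grid=(4, 4), budget=100, min_per_cell=1):
    """Return indices of features kept under a saliency-weighted quota.

    points:   (N, 2) array of (x, y) feature locations in pixels
    scores:   (N,) detector responses (higher is stronger)
    saliency: (H, W) non-negative saliency map
    Assumption: each cell's quota is proportional to its mean saliency.
    Because of rounding and min_per_cell, the total can differ from budget.
    """
    h, w = saliency.shape
    rows, cols = grid
    # Assign every feature to a grid cell.
    cx = np.minimum((points[:, 0] * cols / w).astype(int), cols - 1)
    cy = np.minimum((points[:, 1] * rows / h).astype(int), rows - 1)
    cell = cy * cols + cx

    means = cell_means(saliency, grid)
    total = means.sum()
    weights = means / total if total > 0 else np.full(means.size, 1.0 / means.size)
    quota = np.maximum(min_per_cell, np.round(weights * budget).astype(int))

    keep = []
    for k in range(rows * cols):
        idx = np.flatnonzero(cell == k)
        # Keep the strongest features of this cell, up to its quota.
        strongest = idx[np.argsort(scores[idx])[::-1][:quota[k]]]
        keep.extend(strongest.tolist())
    return np.array(sorted(keep), dtype=int)

def cells_to_update(prev_saliency, curr_saliency, grid=(4, 4), tol=0.1):
    """Flat indices of cells whose mean saliency changed by more than tol."""
    change = np.abs(cell_means(curr_saliency, grid) - cell_means(prev_saliency, grid))
    return np.flatnonzero(change > tol)
```

In this sketch, texture-rich but low-saliency cells keep only their few strongest features, while salient cells receive a larger share of the budget. Between frames, only the cells returned by `cells_to_update` would have their features re-selected, rather than re-running selection over the whole frame.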