Learning Global Pose Features in Graph Convolutional Networks for 3D Human Pose Estimation

Authors: Liu, K., Zou, Z., Tang, W.

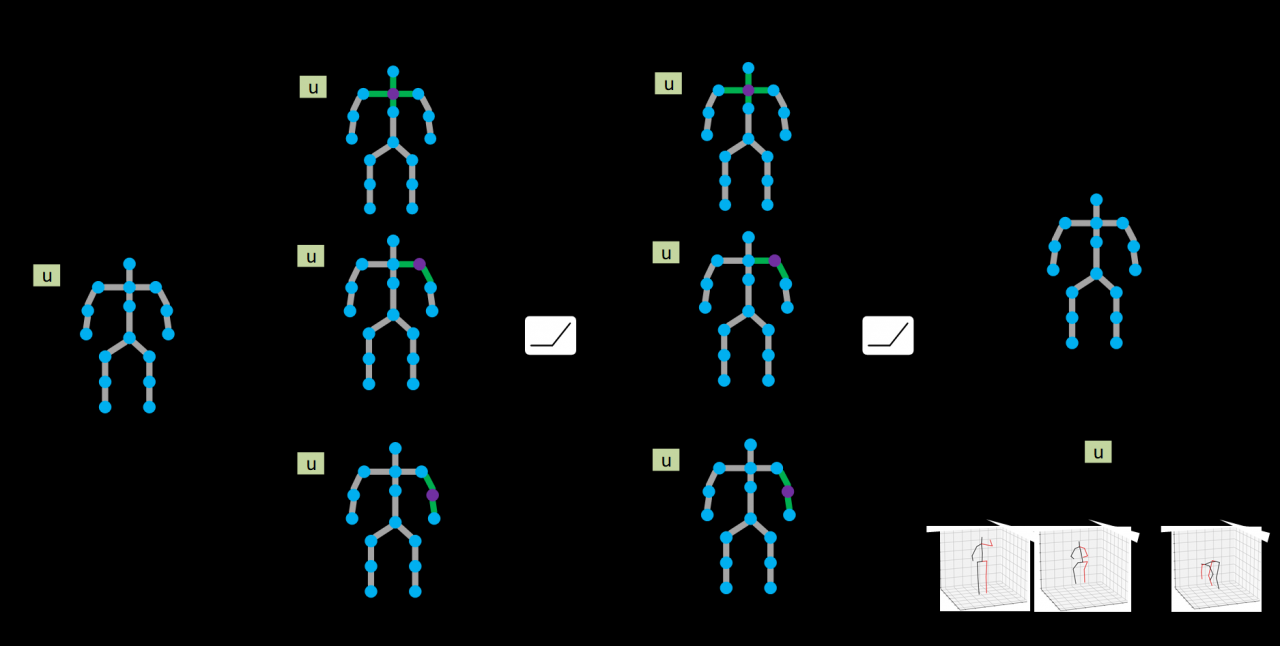

Publication: In Proceedings of the Asian Conference on Computer Vision (ACCV) 2020, Virtual, Kyoto

URL: https://openaccess.thecvf.com/content/ACCV2020/papers/Liu_Learning_Global_Pose_Features_in_Graph_Convolutional_Networks_for_3D_ACCV_2020_paper.pdf

As the human body skeleton can be represented as a sparse graph, it is natural to exploit graph convolutional networks (GCNs) to model the articulated body structure for 3D human pose estimation (HPE). However, a vanilla graph convolutional layer, the building block of a GCN, only models the local relationships between each body joint and its neighbors on the skeleton graph. Some global attributes, e.g., the action of the person, can be critical to 3D HPE, especially in the case of occlusion or depth ambiguity. To address this issue, this paper introduces a new 3D HPE framework that learns global pose features in GCNs. Specifically, we add a global node to the graph and connect it to all the body joint nodes. On the one hand, global features are updated by aggregating all body joint features to model the global attributes. On the other hand, the feature update of each body joint depends not only on its neighbors but also on the global node. Furthermore, we propose a heterogeneous multi-task learning framework to learn the local and global features. While each local node regresses the 3D coordinate of the corresponding body joint, we force the global node to classify an action category or learn a low-dimensional pose embedding. Experimental results demonstrate the effectiveness of the proposed approach.

Funding: The COMPaaS DLV project (NSF award CNS-1828265)

Date: November 30, 2020 - December 4, 2020
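The global-node idea in the abstract can be illustrated with a small NumPy sketch. It is not the authors' implementation. It rests on several assumptions that do not come from the paper: a Kipf-and-Welling-style graph convolution with symmetric normalization, applied to the skeleton graph augmented with one global node; a toy 5-joint skeleton; random weights; the global node initialized as the mean of the joint inputs; and a loss weight `lam`. The helper names (`augmented_adjacency`, `graph_conv`, `multitask_loss`) are hypothetical. The paper's actual layer design and losses may differ.

```python
import numpy as np


def augmented_adjacency(num_joints, edges):
    """Normalized adjacency for the skeleton plus one global node (last index).

    The global node is connected to every joint, and self-loops let each node
    keep its own feature during aggregation.
    """
    n = num_joints + 1
    a = np.eye(n)
    for i, j in edges:
        a[i, j] = a[j, i] = 1.0
    g = num_joints  # index of the global node
    a[g, :num_joints] = 1.0
    a[:num_joints, g] = 1.0
    # Symmetric normalization D^{-1/2} A D^{-1/2} (Kipf & Welling style; an assumption).
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def graph_conv(a_hat, h, w):
    """One layer: each node mixes its neighbors' (and the global node's) features, then ReLU."""
    return np.maximum(a_hat @ h @ w, 0.0)


def multitask_loss(h, w_pose, w_action, pose_gt, action_label, lam=0.1):
    """Joint nodes regress 3D coordinates; the global node (last row) classifies the action."""
    num_joints = h.shape[0] - 1
    pred_3d = h[:num_joints] @ w_pose  # (num_joints, 3)
    pose_loss = np.mean(np.sum((pred_3d - pose_gt) ** 2, axis=1))
    logits = h[num_joints] @ w_action  # (num_actions,)
    logits = logits - logits.max()  # numerical stability for softmax
    log_probs = logits - np.log(np.sum(np.exp(logits)))
    action_loss = -log_probs[action_label]
    return pred_3d, pose_loss + lam * action_loss


# Toy usage: hypothetical 5-joint skeleton with 2D keypoints as input.
rng = np.random.default_rng(0)
num_joints, in_dim, hidden, num_actions = 5, 2, 16, 4
edges = [(0, 1), (1, 2), (0, 3), (3, 4)]
a_hat = augmented_adjacency(num_joints, edges)

x = rng.normal(size=(num_joints, in_dim))
x = np.vstack([x, x.mean(axis=0)])  # global node initialized as the joint mean (assumption)
h = graph_conv(a_hat, x, rng.normal(size=(in_dim, hidden)))
h = graph_conv(a_hat, h, rng.normal(size=(hidden, hidden)))

pred_3d, loss = multitask_loss(
    h,
    w_pose=rng.normal(size=(hidden, 3)),
    w_action=rng.normal(size=(hidden, num_actions)),
    pose_gt=rng.normal(size=(num_joints, 3)),
    action_label=2,
)
print(pred_3d.shape, float(loss))
```

The global node is adjacent to every joint, so a single layer already gives each joint access to a summary of the whole pose. The skeleton edges still carry the local structure. The abstract also mentions a pose-embedding variant. That variant would replace the action-classification head with a regression head on a low-dimensional target, and it is not shown here.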