
Interaction with Multiple Data Visualizations Through Natural Language Commands

Authors: Aurisano, J.

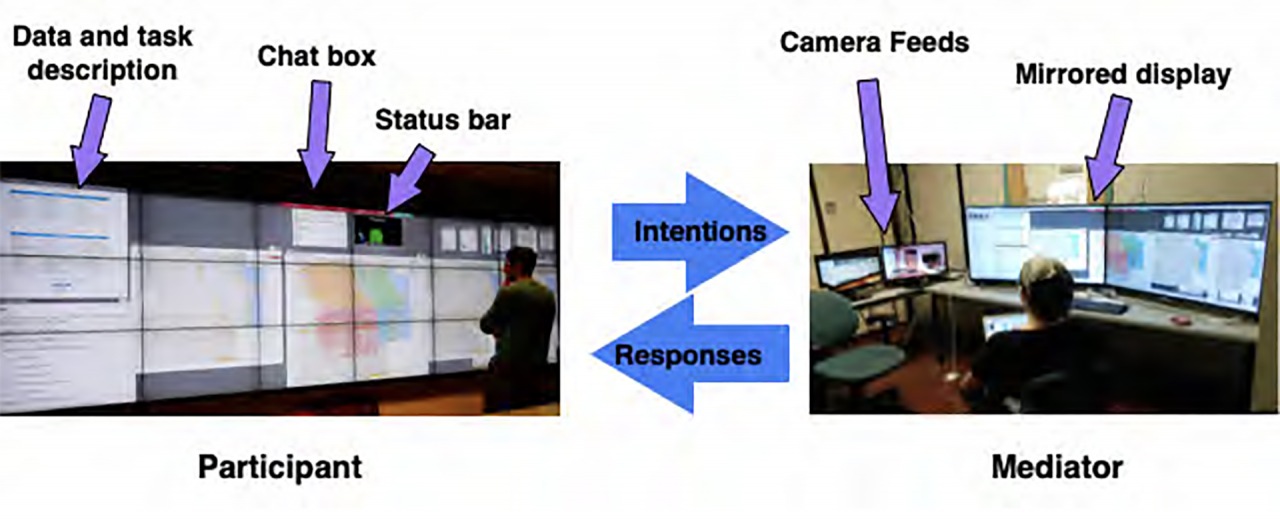

Publication: Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science, Graduate College of the University of Illinois Chicago

Data exploration stands to benefit from environments that permit users to examine and juxtapose many views of data, particularly views that present diverse selections of data values and attributes. Large, high-resolution environments are capable of showing many related views of data, but efficiently creating and displaying visualizations in these environments presents significant challenges. In this dissertation, I will present my research on “multi-view data exploration interactions” that enable users to create sets of views with coherent data value and attribute variations, through multi-modal speech and mid-air pointing gestures in large display environments. This work enables users to rapidly and efficiently generate sets of views in support of multi-view data exploration tasks, organize these views in coherent collections, and operate on sets of views collectively, rather than individually, to efficiently reach large portions of the ‘data and attribute space’. I will present three contributions: 1) an observational study of data exploration in a large display environment with speech and mid-air gestures, 2) ‘Traverse’, an interaction technique for data exploration, based on this study, which uses natural language to create and pivot sets of views, and 3) ‘Ditto’, a multi-modal speech and mid-air pointing gesture interactive environment, which utilizes the multi-view data exploration technique, in large display environments.

Date: November 1, 2021